
Apple announced new AI language models at WWDC. These models run both locally on Apple devices and on Apple’s own Apple Silicon-powered AI servers.

Modern Artificial Intelligence (AI) systems rely on language models, which are trained on large amounts of data so that they can generate responses to prompts (queries).

With additional training, language models can also be specialized in particular subjects so that they act as domain experts on those topics.

AI alignment refers to designing and implementing AI systems so that they conform to human goals, values, and desired outcomes. In other words, alignment is intended to keep AI on task and to prevent it from becoming dangerous by straying from its original purpose.

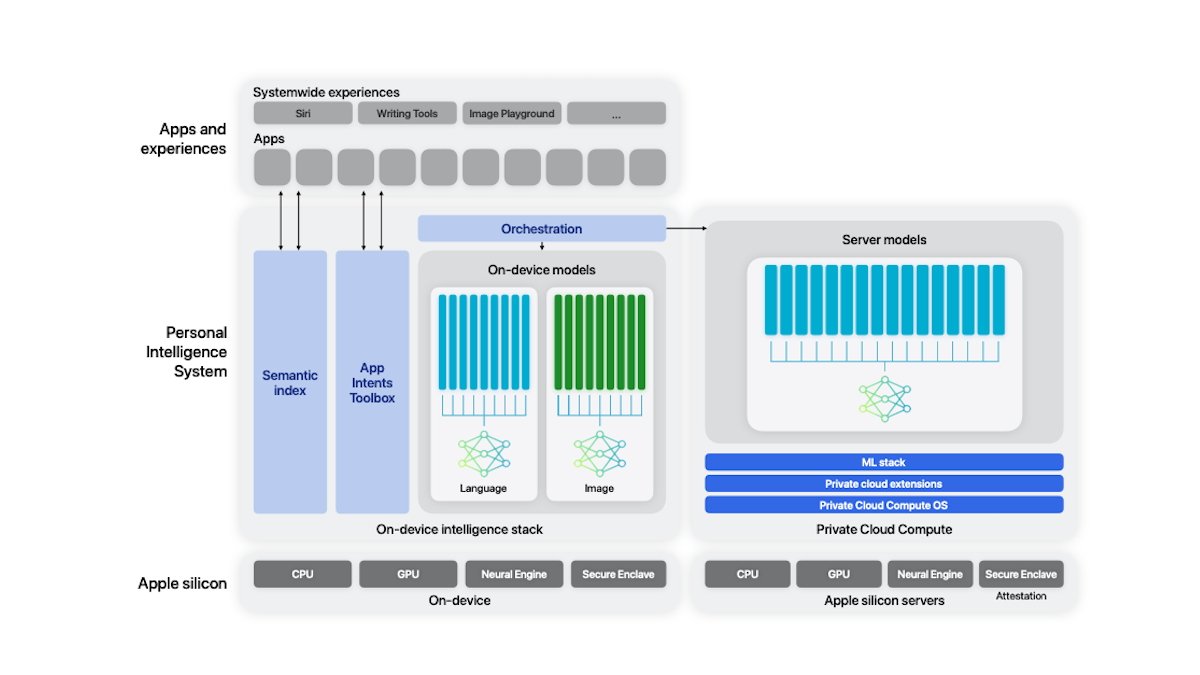

At WWDC 2024, Apple announced Apple Intelligence, its own AI platform, which provides both on-device and server-based AI. Apple says the new models in Apple Intelligence will make its AI more focused, faster, and more accurate.

Foundation language models

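Apple calls its general-purpose generative AI models foundation language models. They are Large Language Models (LLMs) designed for the everyday generative AI tasks most users are likely to want. There are two of them: a model of roughly 3 billion parameters that runs on the device, and a larger model, whose size Apple hasn't disclosed, that runs on Apple's servers.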


Apple calls these two models AFM-on-device and AFM-on-server, respectively.
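Parameter count largely determines how much memory a model's weights occupy, which helps explain why the on-device model is so much smaller than the server model. The back-of-the-envelope sketch below assumes weights dominate memory use and ignores activations, the attention cache, and whatever compression Apple actually applies; the function name is hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate storage for model weights alone, in decimal gigabytes (1 GB = 10**9 bytes).

    Assumption: every parameter is stored at the same precision, and nothing
    other than the weights is counted.
    """
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    # A roughly 3-billion-parameter model at a few common precisions.
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: {weight_memory_gb(3e9, bits):.1f} GB")
```

At 16 bits per weight, 3 billion parameters need about 6 GB for the weights alone, which is why lower-precision storage matters so much on phones.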

Apple also has other general-purpose models built into Apple Intelligence. These models can run both on-device and on Apple’s servers.

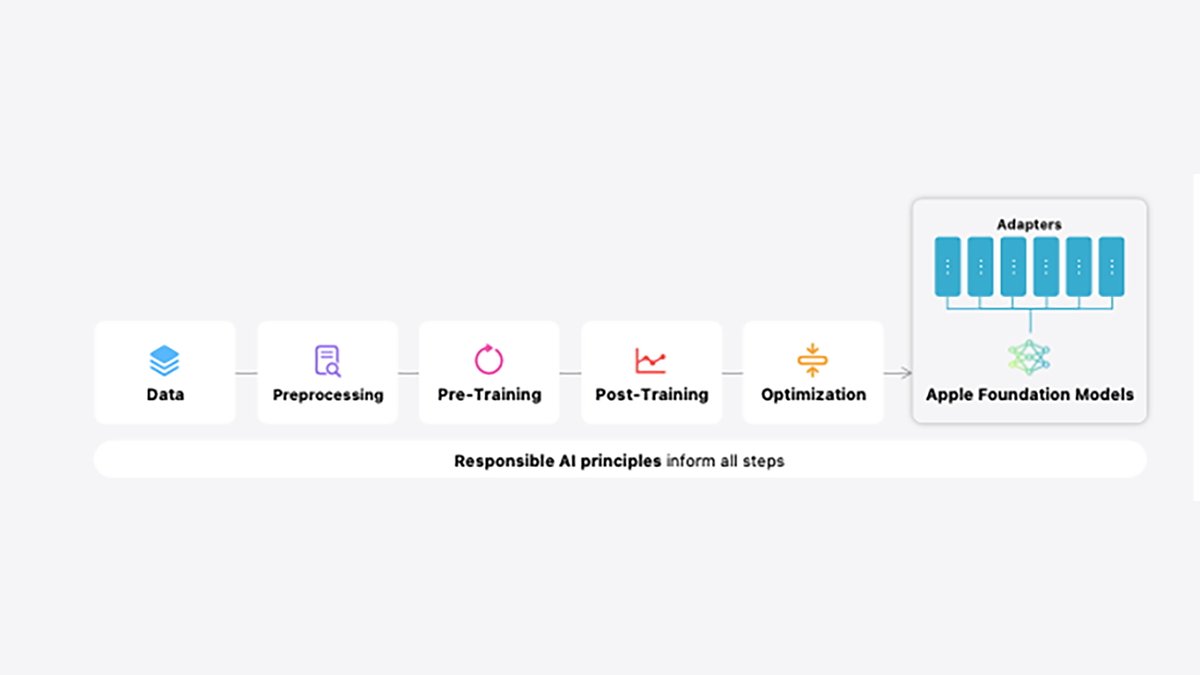

Apple has published a detailed, 47-page white paper on how its foundation language models work. Technically, Apple's foundation models build on a set of established AI techniques, including:

- Transformer architecture

- Shared input/output embedding matrix

- Pre-normalization (using RMSNorm)

- Query-key normalization

- Grouped-query attention (GQA)

- SwiGLU activation

- RoPE (rotary positional embeddings)

- Fine-tuning

- Reinforcement learning from human feedback (RLHF)
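Most of the items in this list are standard transformer building blocks. The pure-Python sketch below shows textbook versions of several of them: RMSNorm used in a pre-normalization residual step, query-key normalization, the SwiGLU activation, rotary positional embeddings (RoPE), and the head-sharing rule behind grouped-query attention. It is an illustration under stated assumptions, not Apple's code: vectors are plain lists, weights are supplied by the caller, the RoPE base of 10,000 comes from the original RoPE paper rather than Apple's configuration, and every function name is hypothetical.

```python
import math


def rms_norm(x, weight, eps=1e-6):
    """RMSNorm: divide x by its root-mean-square, then apply a learned per-feature scale."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for v, w in zip(x, weight)]


def pre_norm_residual(x, sublayer, weight):
    """Pre-normalization: normalize the input before the sublayer, then add the residual."""
    return [xi + yi for xi, yi in zip(x, sublayer(rms_norm(x, weight)))]


def matvec(x, w):
    """Multiply row vector x (length d_in) by matrix w (d_in rows of d_out columns)."""
    return [sum(xi * row[j] for xi, row in zip(x, w)) for j in range(len(w[0]))]


def silu(v):
    """SiLU (swish): v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))


def swiglu(x, w_gate, w_up):
    """SwiGLU: SiLU(x W_gate) multiplied elementwise by (x W_up)."""
    return [silu(g) * u for g, u in zip(matvec(x, w_gate), matvec(x, w_up))]


def rope(x, pos, base=10000.0):
    """RoPE: rotate each consecutive feature pair by an angle proportional to the token position."""
    d = len(x)  # assumed to be even
    out = list(x)
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)  # lower-index pairs rotate faster
        c, s = math.cos(theta), math.sin(theta)
        out[i] = x[i] * c - x[i + 1] * s
        out[i + 1] = x[i] * s + x[i + 1] * c
    return out


def qk_normed_score(q, k, wq, wk):
    """Query-key normalization: RMS-normalize q and k before the scaled dot product."""
    qn, kn = rms_norm(q, wq), rms_norm(k, wk)
    return sum(a * b for a, b in zip(qn, kn)) / math.sqrt(len(q))


def kv_head_for(q_head, n_q_heads, n_kv_heads):
    """Grouped-query attention: each group of query heads shares one key/value head."""
    return q_head // (n_q_heads // n_kv_heads)


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0]
    print(rope(x, pos=0) == x)  # position 0 means no rotation
    print([kv_head_for(h, n_q_heads=8, n_kv_heads=2) for h in range(8)])
```

The grouped-query mapping is the part that saves memory: with 8 query heads sharing 2 key/value heads, only a quarter as many keys and values need to be cached during generation.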


Apple Intelligence also relies on data gathered by Apple's automated web crawler, Applebot. Sites can tell Apple not to use their content by opting out in their robots.txt files.
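To make the opt-out concrete, the sketch below checks a hypothetical robots.txt that blocks Applebot-Extended, the user-agent token Apple documents for opting out of use in training its models, while allowing everything else. Python's standard `urllib.robotparser` only stands in for Apple's own handling of the file here, and the URL and function name are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: opt out of Apple's AI-training token, allow all other crawlers.
ROBOTS_TXT = """\
User-agent: Applebot-Extended
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/article"
    print(is_allowed(ROBOTS_TXT, "Applebot-Extended", page))  # blocked by the first group
    print(is_allowed(ROBOTS_TXT, "Applebot", page))           # falls through to the * group
```

Because the rule targets Applebot-Extended rather than Applebot, a site written this way can stay visible to regular Applebot crawling while opting out of training use.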

For code generation, Apple Intelligence also learns from open-source software hosted on GitHub, which is condensed by automatically removing duplicates.
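Apple's exact deduplication method isn't described here, so the following is only a minimal sketch of one common approach, exact-match deduplication by content hash: each file is lightly normalized, hashed with SHA-256, and kept only if that hash hasn't been seen before. The normalization rule and function names are hypothetical, and real pipelines often add near-duplicate detection on top.

```python
import hashlib


def normalize(source: str) -> str:
    """Hypothetical normalization: strip trailing whitespace and drop blank lines,
    so trivially reformatted copies of a file produce the same hash."""
    lines = [line.rstrip() for line in source.splitlines()]
    return "\n".join(line for line in lines if line)


def dedupe(files):
    """Keep the first occurrence of each distinct (normalized) source file."""
    seen = set()
    unique = []
    for source in files:
        digest = hashlib.sha256(normalize(source).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(source)
    return unique


if __name__ == "__main__":
    corpus = ["a = 1\n", "a = 1   \n\n", "b = 2\n"]
    print(dedupe(corpus))  # the second file is a whitespace-only variant of the first
```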

The white paper describes in detail how the models work and how they were trained, including some advanced math at the end.

Private Cloud Compute

Apple's Private Cloud Compute (PCC) is a remote AI service that uses all of the above models and also has access to additional models for more advanced tasks.

According to a blog post describing PCC, Apple's goals for PCC include speed, accuracy, privacy, and site reliability.

PCC servers also use the same Secure Enclave and Secure Boot technologies as Apple's consumer devices, to help ensure that the operating system and data can't be tampered with.
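Secure Boot works as a chain of trust: each piece of boot software is checked before it is allowed to run. The simplified sketch below illustrates only that idea by comparing each stage's SHA-256 digest against a known-good value and refusing to continue on a mismatch. It is an assumption-laden stand-in, not Apple's mechanism: real Secure Boot verifies cryptographic signatures anchored in read-only hardware rather than consulting a table of hashes, and all names here are hypothetical.

```python
import hashlib


def measure(image: bytes) -> str:
    """Hypothetical measurement: the SHA-256 digest of a boot-stage image."""
    return hashlib.sha256(image).hexdigest()


def verified_boot(stages, expected):
    """Boot stages in order, refusing to continue if any stage's digest
    differs from the known-good value recorded for it."""
    booted = []
    for name, image in stages:
        if measure(image) != expected.get(name):
            raise RuntimeError(f"refusing to boot {name!r}: measurement mismatch")
        booted.append(name)
    return booted


if __name__ == "__main__":
    stages = [("bootloader", b"stage-1 code"), ("kernel", b"stage-2 code")]
    expected = {name: measure(image) for name, image in stages}
    print(verified_boot(stages, expected))

    tampered = [("bootloader", b"stage-1 code"), ("kernel", b"patched code")]
    try:
        verified_boot(tampered, expected)
    except RuntimeError as err:
        print(err)  # the modified kernel is rejected before it runs
```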

Like many other AI offerings from tech companies, PCC executes AI prompts remotely, though Apple says it does so with faster performance.

Apple sums up its foundation models this way:

“Our models have been created with the purpose of helping users do everyday activities across their Apple products, and developed responsibly at every stage and guided by Apple’s core values. We look forward to sharing more information soon on our broader family of generative models, including language, diffusion, and coding models.”

Also, see our articles "iOS 17.6 & more arrives in wake of Apple Intelligence beta release" and "Apple admits to using Google Tensor hardware to train Apple Intelligence."

Apple Intelligence promises to provide iOS and Mac users with faster, optimized AI on devices and in the cloud. We’ll have to wait and see how it plays out with the imminent release of iOS 18 and the next iteration of macOS.