Apple Intelligence is the product of more than a year’s worth of tireless testing. Here’s what Apple engineers used to ensure the quality of their AI software.

For Apple, 2024 was undoubtedly the year of artificial intelligence. The company has long been working on machine learning features, with its most recent operating systems ushering in an entirely new set of AI-powered enhancements. They are known collectively under the moniker of Apple Intelligence.

While the generative AI tools themselves were announced in June, at WWDC 2024, only a handful of them made their public debut with the first developer betas of iOS 18.1 and macOS 15.1. Since then, Apple has rolled out more and more of the AI-powered enhancements with subsequent beta releases.

At the time of writing, the iOS 18.1 and macOS 15.1 updates are nearing the end of beta testing, while the first developer beta of iOS 18.2 has only just arrived. Months after the big announcement, some Apple Intelligence features are still only available on beta versions of Apple’s operating systems.

According to people who spoke with AppleInsider and accurately revealed many Apple Intelligence features months ahead of launch, the company spent a year working on its in-house generative AI tools before they were finally released to the general public.

During development, Apple tried to keep the full scale of its AI endeavors a secret. Individual AI projects received their own codenames, as was the case with the email categorization feature, known as Project BlackPearl.

Apple Intelligence as a whole, however, was known by the codename Greymatter — an unmistakable reference to a type of tissue found in the human brain. Some of Apple’s internal test applications also had names that concealed their overall purpose.

During the development of Apple Intelligence, Apple used at least two dedicated test applications and environments to test its AI software.

Apple used multiple test applications during the development of iOS 18 and macOS Sequoia.

The two apps in question are known as 1UP, a reference to the ever-popular Super Mario series by Nintendo, and Smart Replies Tester. The name of the latter is self-explanatory, given that AI-powered Smart Replies have since made their way into release versions of Apple’s operating systems, in the Mail and Messages applications.

We were told that internal distributions of iOS 18.0 and macOS 15.0 Sequoia featured many of the underlying Apple Intelligence frameworks used in the publicly available betas of iOS 18.1 and macOS 15.1.

The frameworks were necessary for testing and were included alongside the standard development and configuration utilities found in Apple’s internal-use operating systems.

Different AI-related features could be toggled through feature flags, with the use of the Livability application. 1UP and Smart Replies Tester, the two known AI applications, were used by Apple’s engineers to test the different aspects and use cases of Apple Intelligence.

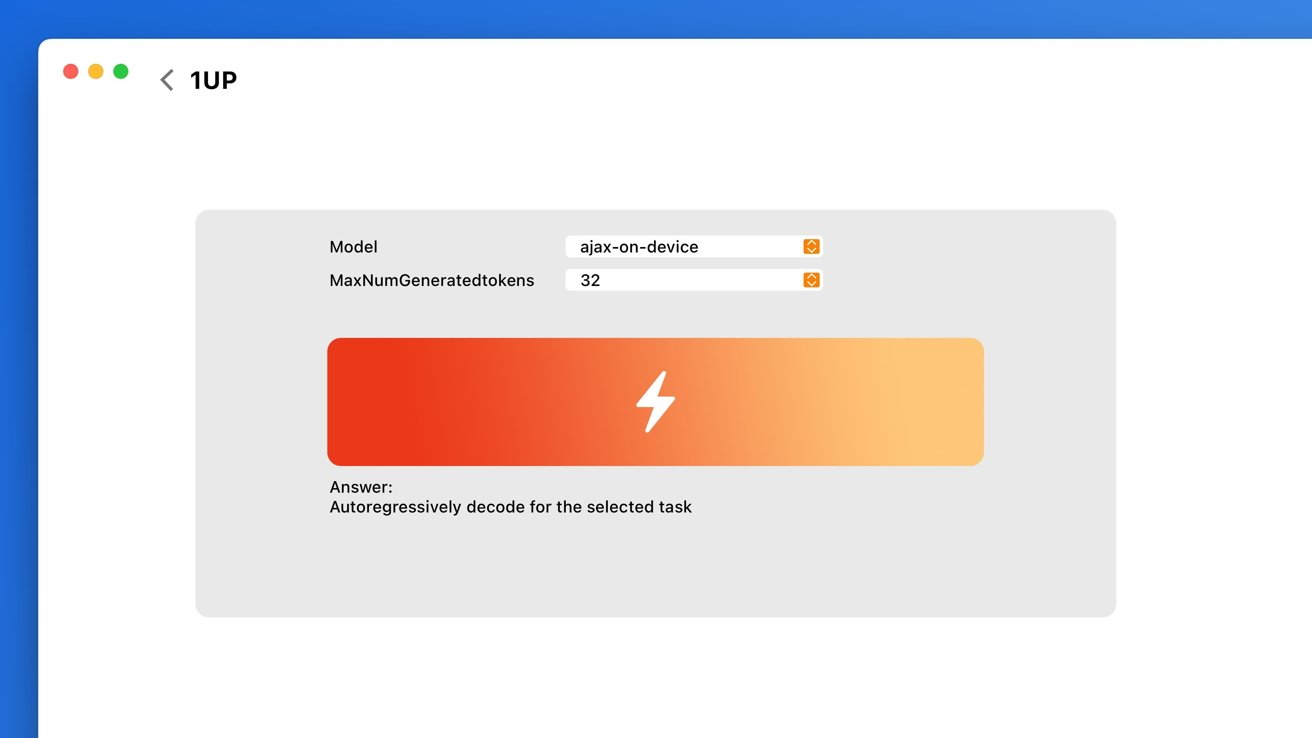

1UP — Text-generation testing with AI models

Found even in the earliest internal-use builds of iOS 18 and macOS Sequoia, the 1UP application was used for testing text-related generative AI features. The application itself featured a variety of different test options and parameters, which could be adjusted as needed.

The 1UP app featured tests related to text-generation, and references to the Ajax large language model.

People familiar with the application have told AppleInsider that it contains direct references to Apple’s long-rumored in-house large language model (LLM), known as Ajax, which can function on-device.

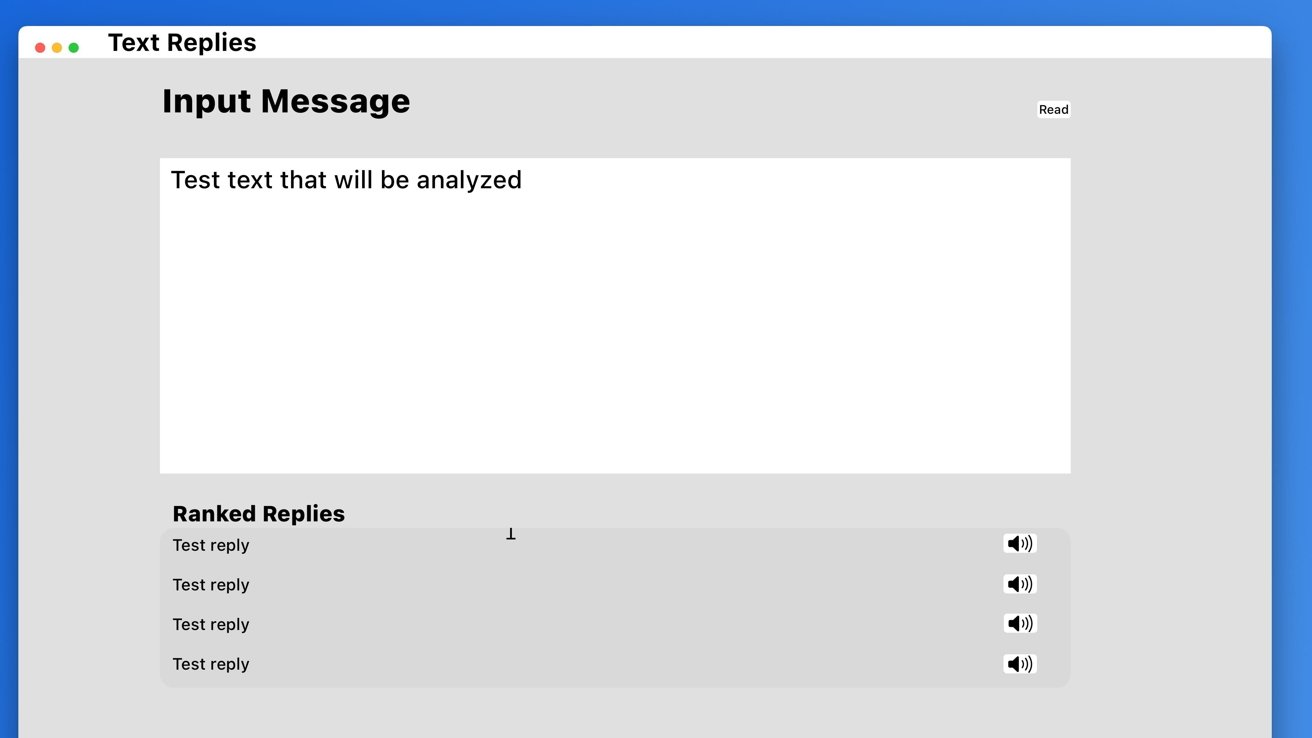

The 1UP app features multiple test options, organized into different sections. One of the tests involves text generation. This part of the app was used to test “autoregressive text generation from a prompt,” people familiar with the app told us.

It allowed its users to choose between different AI models, including the aforementioned on-device Ajax LLM. The application also featured a setting to adjust the maximum number of generated tokens, which could be set anywhere from 30 to 100, the default being 48.
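To make the token setting concrete, here is a minimal sketch of how a capped autoregressive loop might work. Only the 30-to-100 range and the default of 48 come from what we were told; the `GenerationSettings` class, the `generate` function, and the toy model are hypothetical stand-ins, not Apple's code.

```python
from dataclasses import dataclass
from typing import Callable, List

# Range and default as reported for the 1UP setting.
MIN_TOKENS, MAX_TOKENS, DEFAULT_TOKENS = 30, 100, 48


@dataclass
class GenerationSettings:
    """Hypothetical settings object; the names are illustrative only."""
    model_name: str = "on-device"
    max_new_tokens: int = DEFAULT_TOKENS

    def __post_init__(self) -> None:
        # Clamp out-of-range values into the reported 30..100 window.
        self.max_new_tokens = max(MIN_TOKENS, min(MAX_TOKENS, self.max_new_tokens))


def generate(prompt: List[str], next_token: Callable[[List[str]], str],
             settings: GenerationSettings, stop: str = "<eos>") -> List[str]:
    """Autoregressive loop: each new token is predicted from all tokens so far."""
    tokens = list(prompt)
    for _ in range(settings.max_new_tokens):
        token = next_token(tokens)
        if token == stop:
            break
        tokens.append(token)
    return tokens[len(prompt):]


def toy_model(context: List[str]) -> str:
    """Stand-in for a real language model: cycles through a fixed vocabulary."""
    vocab = ["hello", "from", "a", "toy", "model"]
    return vocab[len(context) % len(vocab)]
```

Calling `generate(["hi"], toy_model, GenerationSettings())` stops after 48 new tokens, since the toy model never emits the stop token.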

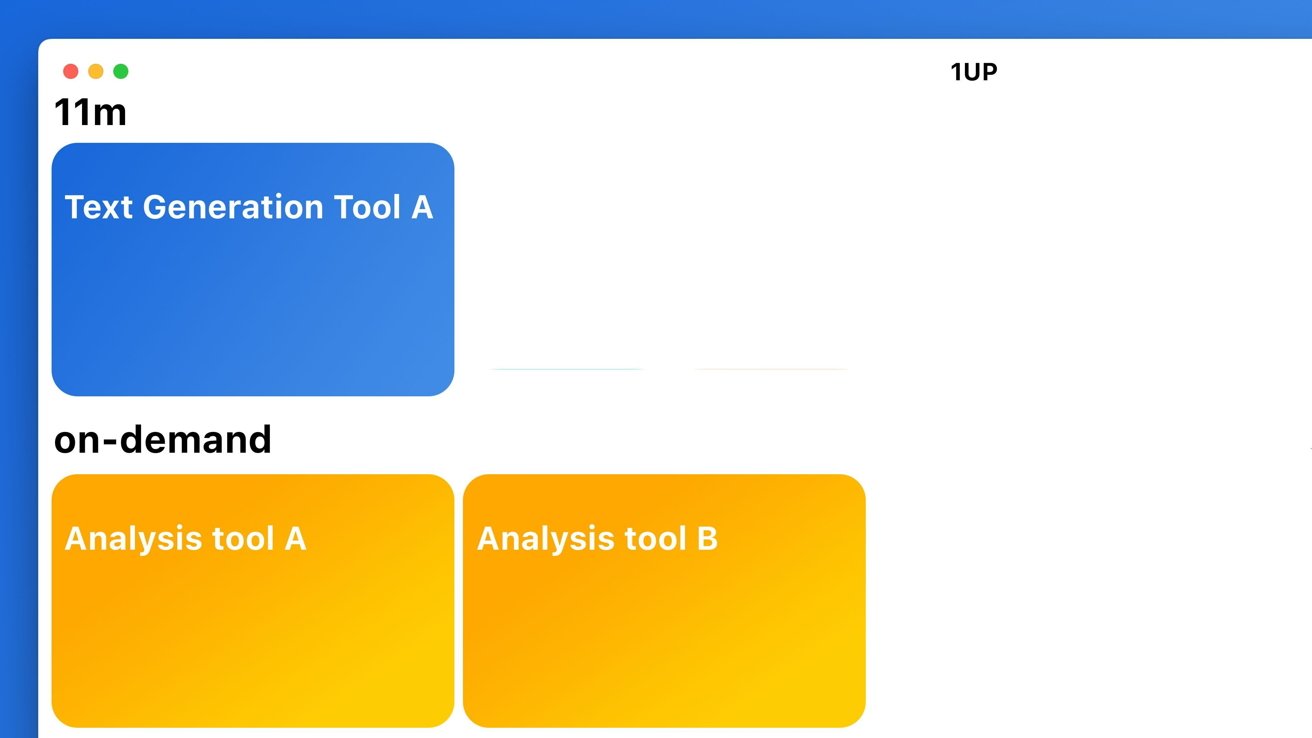

1UP — Document analysis, topic analysis, and text understanding

Based on what we were told, it’s apparent that Apple placed significant focus on AI’s document and file understanding. Some tests found within the 1UP app were focused on document and text analysis. Whether the user input consisted of raw text, a PDF, or a Word document, Apple’s software was supposed to identify key information within the text, such as phone numbers, addresses, languages, and the author of the text, if applicable.

A mockup of the 1UP user interface, based on the information provided to us by people familiar with the app.

Web history from Safari and conversations from Messages could also be analyzed for keywords, or “topics,” as they were known within the app. This could include words that repeat often or those that appear to be the focal point of a text. Apple-specific terms are also recognized, and key sentences are isolated.
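As a rough illustration of the "words that repeat often" idea, the sketch below ranks terms by frequency after dropping common filler words. This is an assumed, simplified approach for illustration; we have no information on how Apple's software actually selects topics, and the `top_topics` function and stopword list are hypothetical.

```python
import re
from collections import Counter
from typing import List

# Small illustrative stopword list; a real system would use a far larger one.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "it",
             "on", "for", "with", "that", "this"}


def top_topics(text: str, limit: int = 3) -> List[str]:
    """Return the most frequent non-stopword terms in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in STOPWORDS and len(w) > 2)
    return [word for word, _ in counts.most_common(limit)]
```

Frequency alone would not capture the "focal point" of a text, which likely requires a language model rather than simple counting.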

The app was also capable of cross-referencing the information found in a text or document with the user’s information. For instance, it could check whether a phone number was saved in the user’s Contacts, or whether an event was found in the Calendar.
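The cross-referencing step can be sketched as a lookup of extracted phone numbers against a contact list. Everything here is an assumption for illustration: the regular expression is deliberately simple, the contact dictionary is made up, and none of the function names come from Apple's software.

```python
import re
from typing import Dict, List, Optional

# Deliberately simple pattern: an optional "+", then digits mixed with
# common separators. It will also match other long digit runs, such as dates.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{5,}\d")


def normalize(number: str) -> str:
    """Strip everything except digits so formats can be compared."""
    return re.sub(r"\D", "", number)


def find_phone_numbers(text: str) -> List[str]:
    """Return normalized phone-number candidates found in the text."""
    return [normalize(match.group()) for match in PHONE_RE.finditer(text)]


def cross_reference(text: str, contacts: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Map each number found in the text to a contact name, or None if unknown."""
    by_number = {normalize(num): name for name, num in contacts.items()}
    return {number: by_number.get(number) for number in find_phone_numbers(text)}
```

Given a hypothetical contact list of `{"Alex": "555-010-1234"}`, a message containing "555 010 1234" would map to Alex, while any number not in the list would map to `None`.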

The significance of the 1UP tests, and the clues about Apple Intelligence

The 1UP tests offered hints as to what would eventually become Apple Intelligence features, such as the upgraded Siri with personal context, and Writing Tools. With Apple Intelligence, it’s possible to edit texts and generate text-based summaries of the user’s conversations, where key details such as names, dates, and locations are highlighted.

Apple’s own AI prompts also revealed that the company explored multiple levels of summarization, including summaries consisting of only 10 or 20 words. AppleInsider paraphrased many of these prompts before they were ever made public.

The tests within the 1UP app are indicative of what Apple wanted to do with Safari as well, which was to have its AI use the information from web pages the user visits. This idea eventually led to the Intelligent Search feature, now known as Highlights.

Text generation and document analysis are now handled by ChatGPT rather than Apple’s AI

With the first developer beta of iOS 18.2, Apple notably improved Siri through integration with OpenAI’s ChatGPT. Requests and queries that Siri is unable to process are handed over to ChatGPT, albeit only with direct user approval.

Tests in the 1UP app seem to mirror the functionality made possible by ChatGPT integration in iOS 18.2.

iOS 18.2 also introduces a new splash screen outlining some of the key features made possible via ChatGPT integration, such as text generation in Writing Tools and document analysis.

The 1UP app features tests for virtually the same things, indicating that Apple had perhaps wanted to accomplish ChatGPT-like features independently, through its own AI models.

Along with the 1UP app, Apple used another internal application known as Smart Replies Tester.

Smart Replies Tester — evaluating AI-generated responses

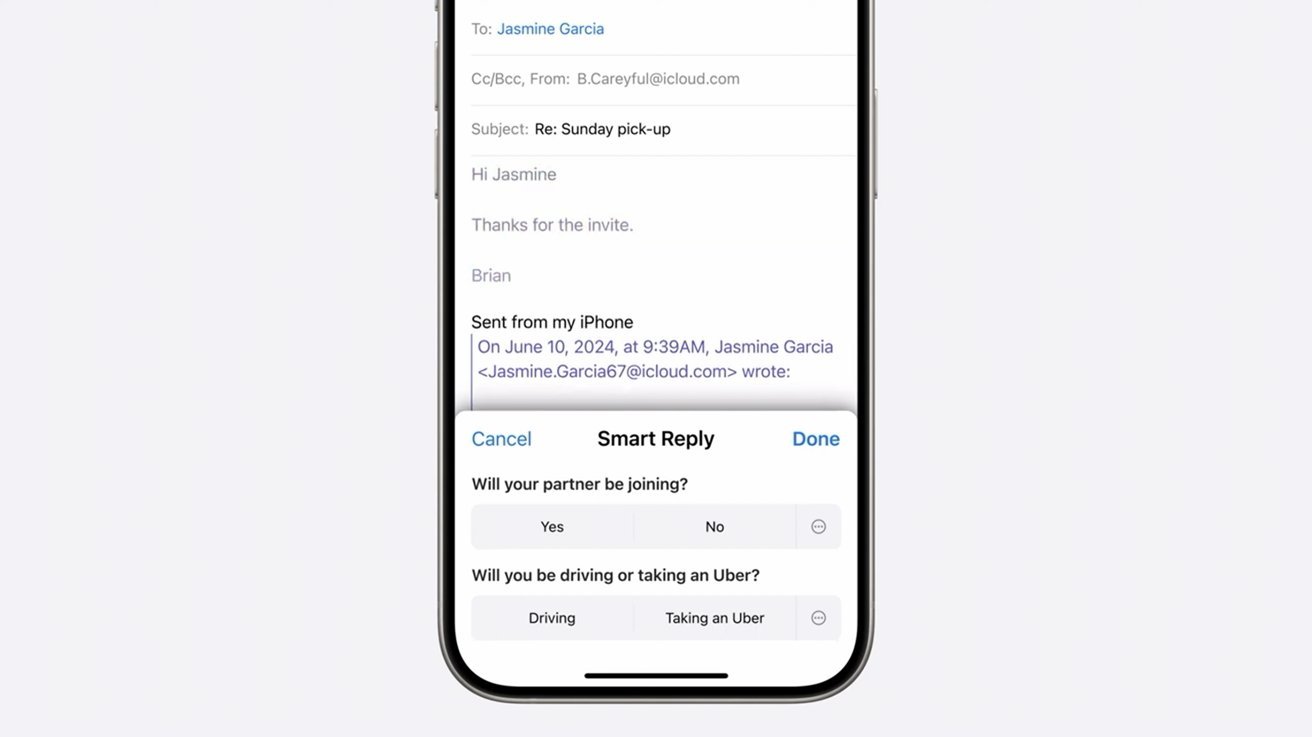

With iOS 18.1, Apple introduced AI-assisted Smart Replies, which are available in Mail and Messages. This feature makes it significantly easier to draft a response to an email or message within Apple’s built-in apps.

Internally, Apple used a dedicated app to test Smart Replies. It instantly generated multiple replies based on the input text.

On an iPhone, Smart Replies appear as response suggestions above the keyboard in Mail. Apple Intelligence can generate responses to direct questions the user may be replying to, but it is often less useful in other situations.

Smart Replies Tester was seemingly built to test just that: how well Apple’s AI can generate a response, and how quickly. The app measured the response generation time in milliseconds.

According to people familiar with the matter, the internal application consists of multiple test menus where users can input text, and instantly receive several AI-generated Smart Replies. This occurs entirely on-device, and the responses change as soon as the input text is altered in any way.
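Measuring generation time in milliseconds is straightforward to sketch. The example below wraps a reply generator in a timer; the `canned_replies` function is a placeholder standing in for a real model, and all names are hypothetical rather than taken from Apple's tool.

```python
import time
from typing import Callable, List, Tuple


def timed_replies(generate: Callable[[str], List[str]],
                  message: str) -> Tuple[List[str], float]:
    """Run the generator and return its replies plus elapsed time in ms."""
    start = time.perf_counter()
    replies = generate(message)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return replies, elapsed_ms


def canned_replies(message: str) -> List[str]:
    """Placeholder generator; a real tester would call a language model here."""
    if message.rstrip().endswith("?"):
        return ["Yes, that works.", "No, sorry.", "Let me check."]
    return ["Thanks!", "Got it.", "Sounds good."]
```

Re-running `timed_replies` whenever the input changes would give the kind of live-updating behavior described above, with a fresh timing for each set of suggestions.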

Smart Replies can be found in the Mail app on iOS 18.2.

The app can also be used with an image captioning model, which is downloaded separately. Mass image captioning was possible as well. As for similar features in the iOS 18.1 beta, the Photos application now contains greatly improved search functionality, which lets people locate images containing specific objects or locations with relative ease.

While Smart Replies Tester is distinctly AI-related, other internal applications also offer insight into Apple’s approach and way of thinking in regard to artificial intelligence.

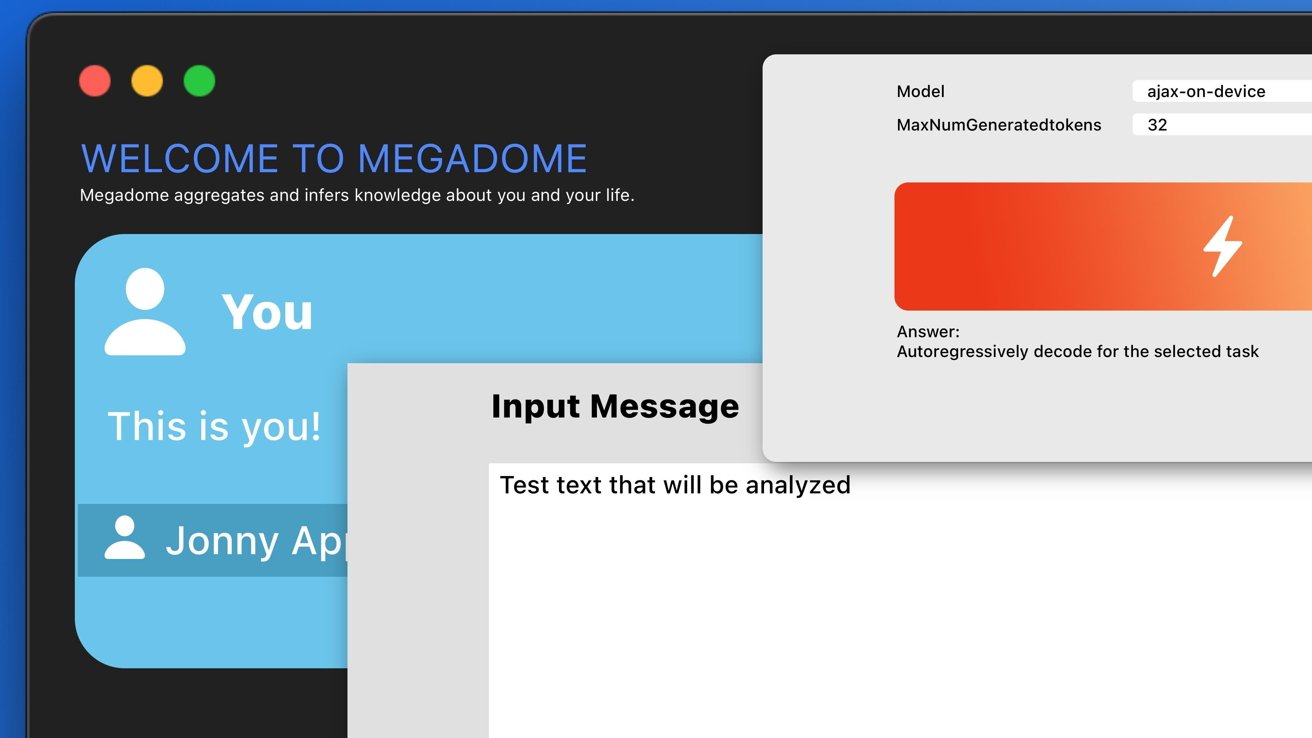

Megadome — Your personal context, all in one app

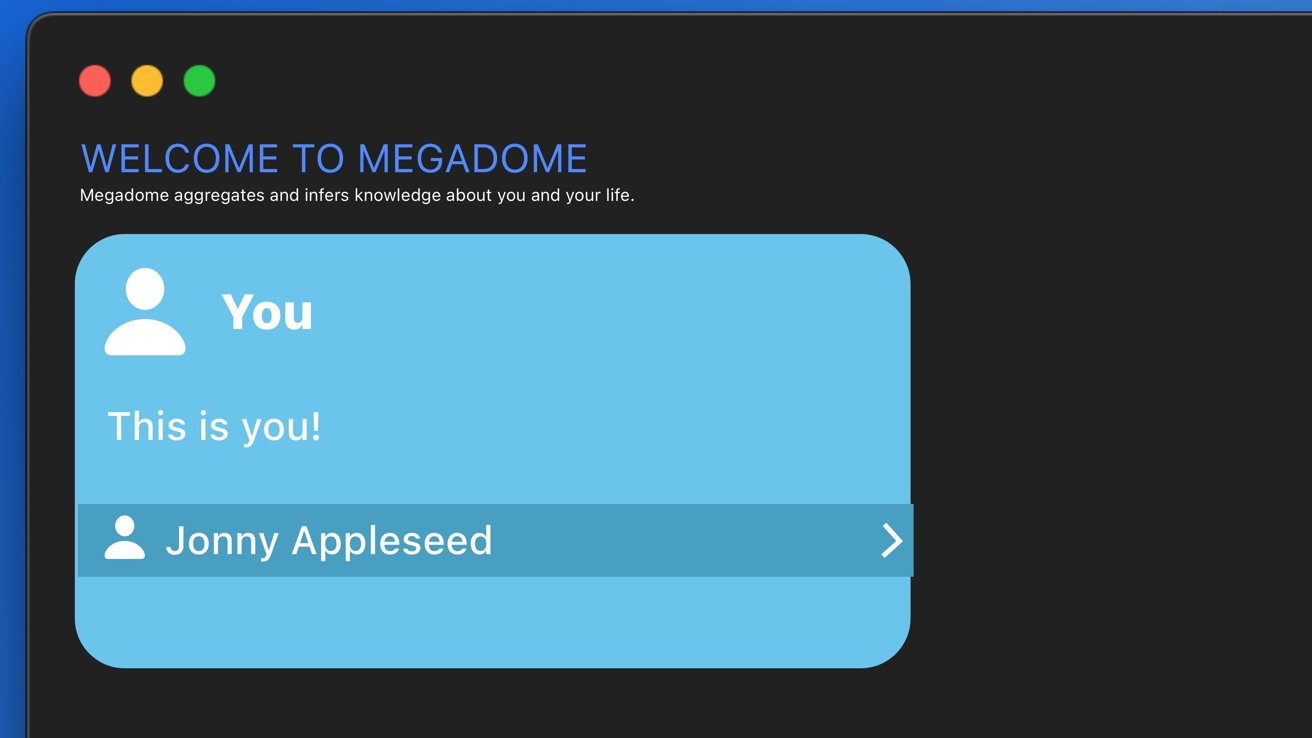

Another of Apple’s internal apps, Megadome serves as the perfect visual aid for Siri’s upcoming personal context feature, powered by Apple Intelligence.

The Megadome app aggregates user data and organizes it into different categories.

According to people familiar with the matter, the Megadome application can gather relevant user information, sort it into categories, and present it in the form of neatly organized cards.

The app can display the most important details about its user, including their full name, significant locations, relationships, groups, contact information, organizations, installed software, and much more. Megadome seemingly gathers this information from system applications the user has interacted with.

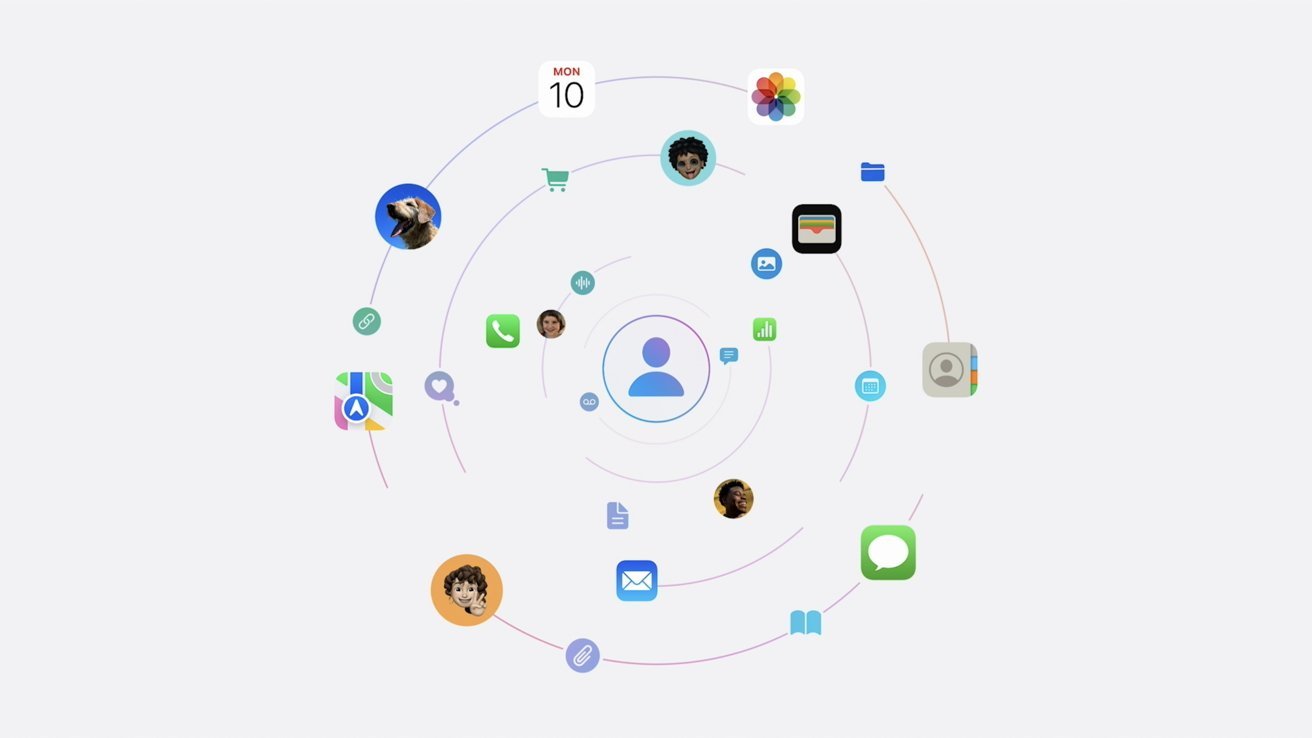

This information can also be viewed in the form of a so-called “Reality Graph,” which visualizes the relationship between entities and locations in the form of a diagram.
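One plausible way to model such a graph is as a set of labeled edges between entities, which can then be grouped into category cards. This is purely a sketch under our own assumptions: the `EntityGraph` class, the relation names, and the sample data are invented for illustration and do not reflect how Megadome stores its data.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class EntityGraph:
    """Hypothetical directed graph of entities joined by labeled relations."""

    def __init__(self) -> None:
        self._edges: Dict[str, List[Tuple[str, str]]] = defaultdict(list)

    def relate(self, source: str, relation: str, target: str) -> None:
        """Record that `source` is connected to `target` via `relation`."""
        self._edges[source].append((relation, target))

    def neighbors(self, entity: str) -> List[Tuple[str, str]]:
        """Return (relation, target) pairs for one entity."""
        return list(self._edges.get(entity, []))

    def cards(self, entity: str) -> Dict[str, List[str]]:
        """Group an entity's connections by relation, like category cards."""
        grouped: Dict[str, List[str]] = defaultdict(list)
        for relation, target in self.neighbors(entity):
            grouped[relation].append(target)
        return dict(grouped)
```

For example, after adding edges such as `("User", "lives_in", "Cupertino")` and `("User", "sibling", "Sam")`, calling `cards("User")` returns one list per relation, which maps naturally onto a card-based layout.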

Why Apple made Megadome, and the features it mirrors

While the idea of an app that knows everything about you might seem nightmarish at first glance, the app is merely an internal-use tool, not something made for the general public. Its existence ultimately makes sense when things are put into context.

Apple’s Megadome app offers some insights about the company’s thought process.

With Apple Intelligence, Siri will gain the ability to process natural language. The virtual assistant will also have a firm grasp of the user’s so-called personal context, as a result of the AI upgrade.

This means that Siri will be able to understand facts about the user’s life — the different people and places important to them. In many ways, the Megadome app is an embodiment of this idea. Apple wanted to build a tool that could understand the important aspects of someone’s life, and use these details to help the user.

What does this mean for the future of Apple Intelligence?

Apple’s internal applications often serve as an accurate indicator of things to come in the near future. Although they may feature various puns, memes, and obscure inside jokes, the company’s test apps reveal quite a lot about in-development features.


Apple’s test applications often contain tidbits about upcoming features, such as the ones powered by Apple Intelligence.

While names such as 1UP, GreyParrot, and Megadome don’t mean anything to the average user, almost everyone has used the Calculator or tested Apple Intelligence in one form or another.

This phenomenon is hardly anything new. Even back in 2020, the internal-use app known as Gobi painted a pretty good picture of what would eventually become App Clips. Should any information about future test apps come to light, we will most likely be able to infer something about an upcoming feature.

In the meantime, the iOS 18.2 update introduces a series of long-awaited Apple Intelligence features. Image Playground and Visual Intelligence are among the key upgrades found in iOS 18.2.