Apple Intelligence is coming this fall

At WWDC, Apple introduced Apple Intelligence as an expansion of its AI capabilities. It’s incredibly impressive in action, and if you let it, it will change how you work.

The span and ambition of Apple Intelligence are staggering, and it's not even in the betas yet. This project, years in the making, can help you search video content, answer personalized questions, and create any image you can imagine.

The keynote at Apple's annual developer conference devoted nearly half of its run time to these features. Afterward, we got to see them firsthand.

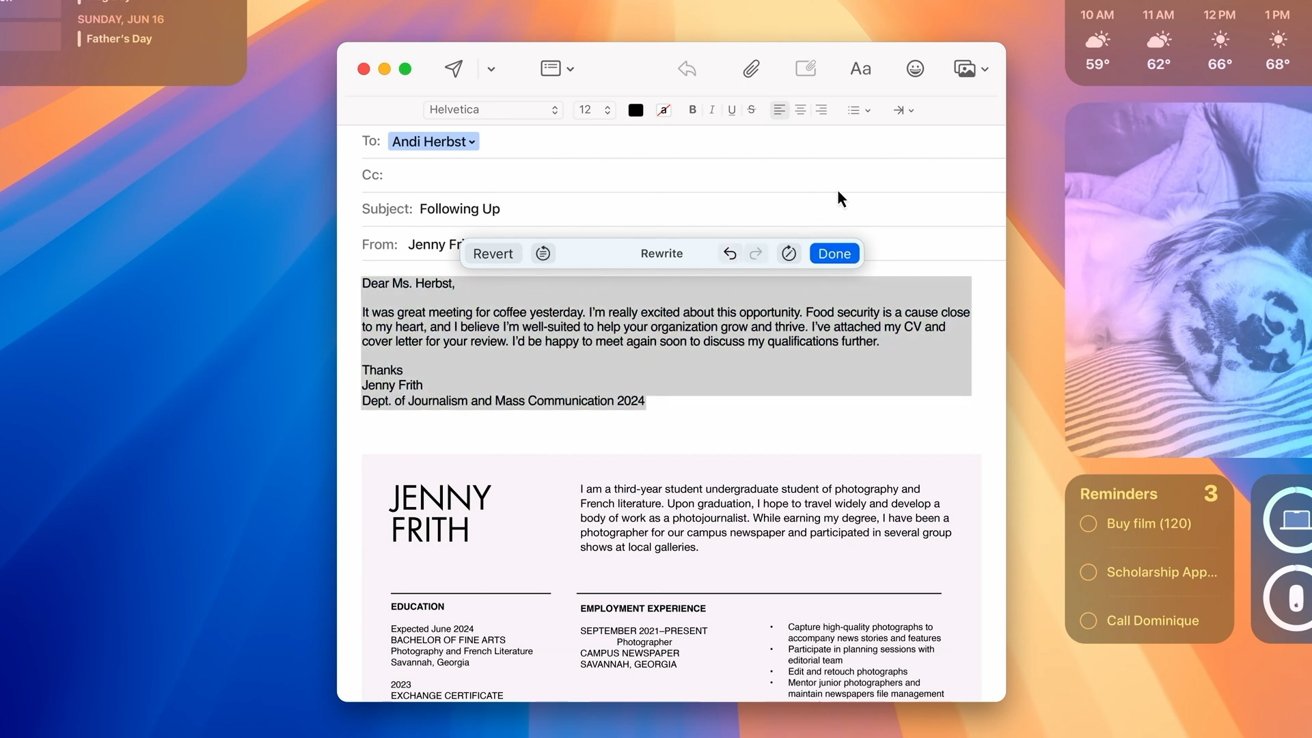

As someone who needs to bash out words for part of my job, I found that the writing tools of Apple Intelligence stood out. They work anywhere there is a text field.

Select some text, and right alongside the familiar cut, copy, and paste buttons, you'll find the Apple Intelligence writing tools.

You'll find them in a blogging app, an email, Messages, or the browser. Apple Intelligence will proofread your work, rewrite it, or adjust the tone.

Apple Intelligence can help give you confidence in your writing

When proofreading, it will highlight each change. You can step through them individually and learn what changed, or you can accept them all at once.

If you rewrite the text, you can choose a style that gives you a different take on what you had. For example, you can take a nasty email to a neighbor and have Apple Intelligence resculpt it to be more professional, more neutral, and the like.

In our trial, Apple Intelligence took an internship application email, swapped out the intro and the exclamation points, and rephrased some questionable sentences, making it sound far more professional than the original.

In our demo, it looked just as good as it did in Apple’s keynote. We’ll be comparing it against Grammarly and Microsoft Word’s grammar and spelling checkers in the future.

Working with images via Apple Intelligence

There are three primary ways that Apple Intelligence works with images. It can make Genmoji characters, it can create unique images based on a description, and it can edit your photos.

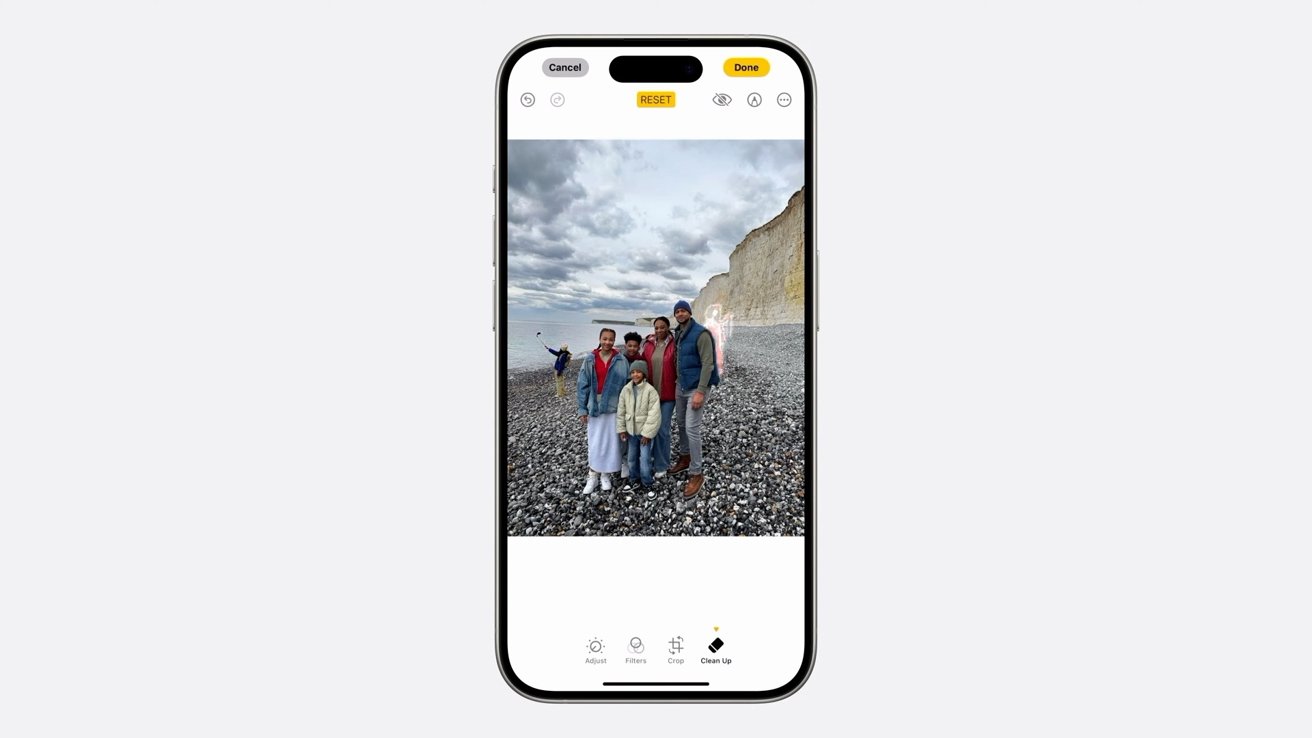

AI-powered photo editing is long overdue from Apple, with Google and Samsung already touting AI tools that manipulate your shots. Now, Apple is taking its first steps into the arena.

Apple’s goal is still clearly to maintain the original intent of the photo. So you’re not going to be adding in people, opening someone’s eyes, turning a crying baby into a smiling one, or anything like that.

Apple Intelligence's Clean Up tool helps remove distractions

Choose a photo, and the tool automatically scans it for distractions. Another great-looking animation plays as it processes the image and highlights them.

Just tap any of them, and they’ll be removed, filling in the pixels in their absence. Apple put a lot of effort into retaining textures, finding the edges of hair, and other nuances to make edited images in Photos look as natural as possible.

Most distractions will be bigger objects like people walking in the background, a bird that flew into the frame, and other photo-bombers. Blemishes can potentially be removed, too.

Results will depend on various circumstances, which we'll be sure to test ourselves when we can. But since you can also select distractions manually, something like a red dot on your face can likely be removed.

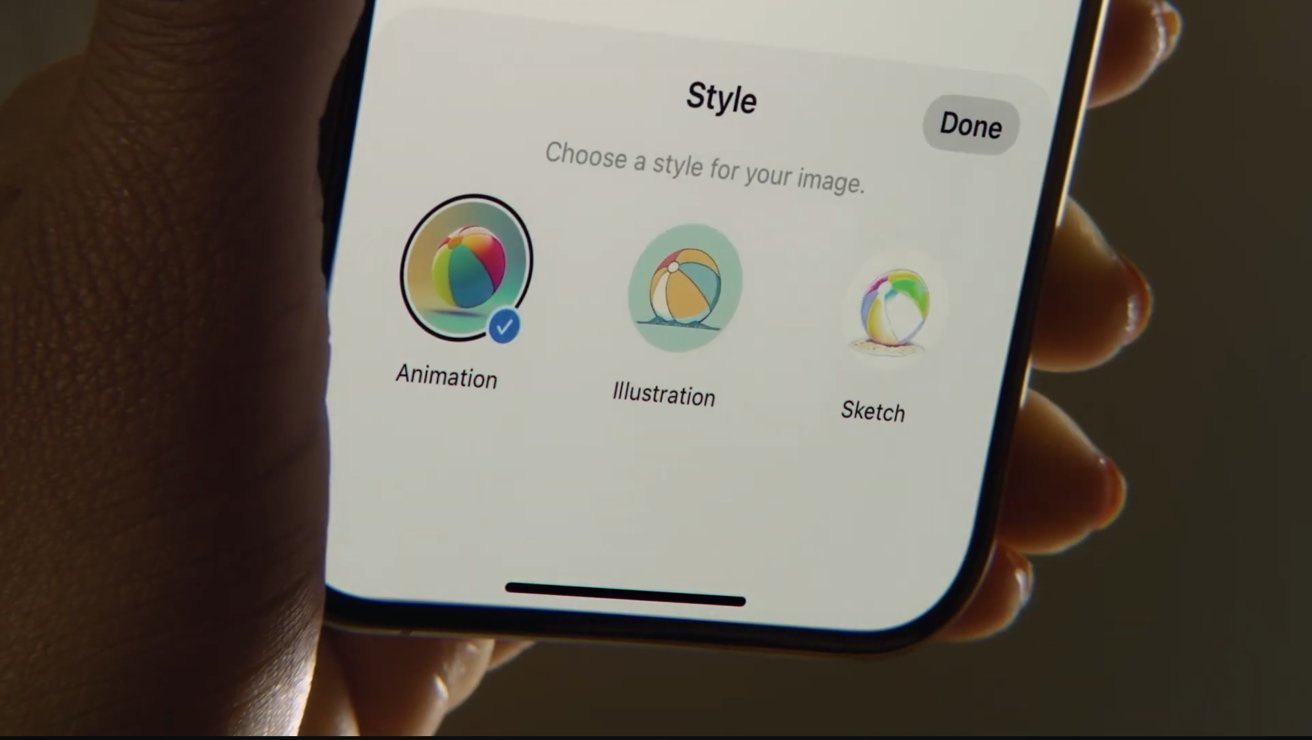

Generating entirely new images is a different beast. Apple even created a dedicated app — Image Playground — to create these images.

Generate images in three distinct styles

A picture can be created in three styles: sketch, animation, and illustration. Notably, photorealistic isn't among the options.

Apple trained its generative models on publicly available art, licensed art, and commissioned art to specifically match what it was going for.

When an image is generated, the system performs multiple checks for adult or copyrighted content: when the request is submitted, on the remote servers, and before the image is sent to the user.

Users can provide feedback, too, if something goes awry. Generated images are tagged as created with Image Playground in their EXIF data, as we've already discussed.

Image Playground lets you generate images

Our main takeaway after the time we had with Image Playground was just how well it worked. Images came back very fast, and when they did, users could quickly move between the different styles on the fly.

If you add more criteria, it iterates and generates new images. And if you remove any, it backpedals to the previous version it had created.

The whole thing felt fun, easy, and approachable. We expect this to be the most visible tool, the one whose results people use and share most, since text rewritten by Apple Intelligence is far more difficult to spot.

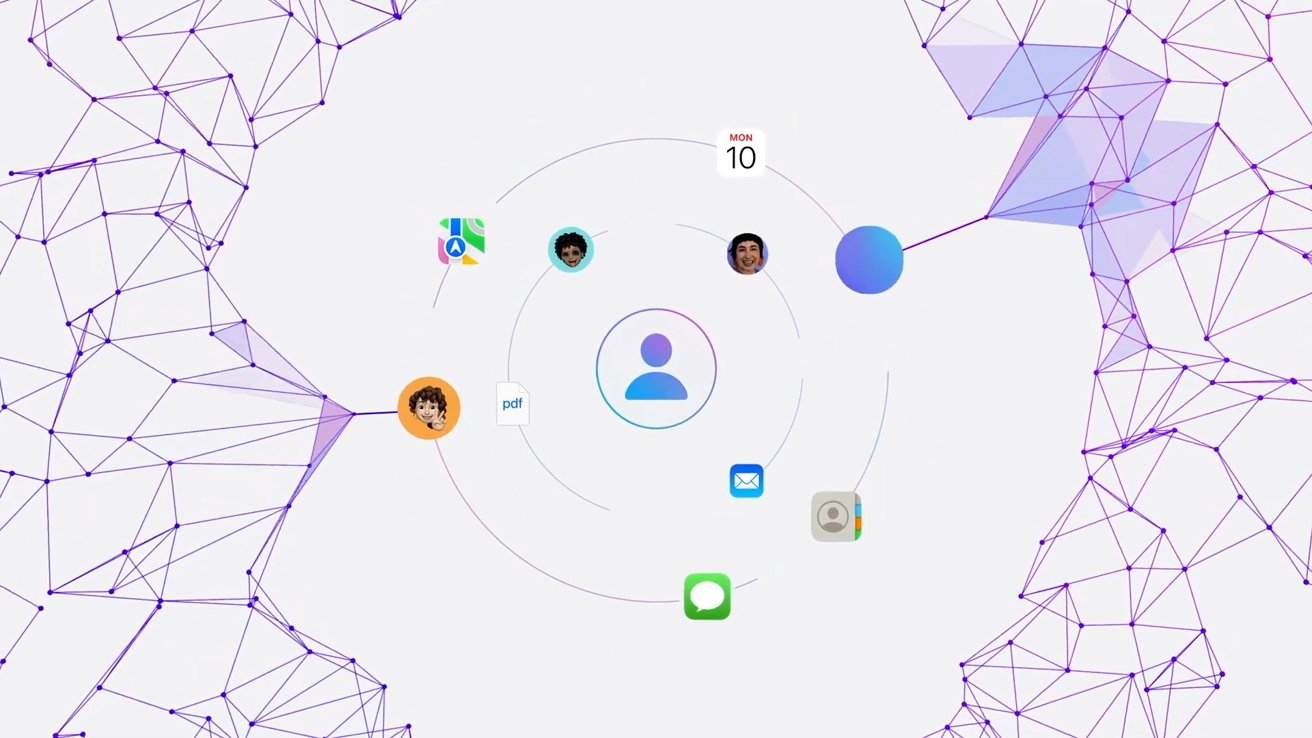

Apple Intelligence and Siri

Starting in iOS 18 and macOS Sequoia, Siri leverages Apple Intelligence to improve performance, accuracy, and what the voice assistant can do.

Arguably, this is the most substantial portion of the Apple Intelligence repertoire. Apple customers already make millions of Siri queries per day, with varying success.

Siri will be able to tap into your personal information, all locally on the device. This means that it can be far more aware of you and your needs.

It’s able to answer questions about your texts, your emails, photos, videos, schedule, and more. Importantly, this is something no other AI assistant is capable of doing with the same degree of privacy.

It starts with a slick new invocation animation.

Siri now animates from the edges with iOS 18

When you invoke Siri via the side button, a subtle effect makes it look like you're pressing into the screen. After a brief pause, a colorful, lively animation spreads around the edge of the display.

It's a step up from the glowing orb. And it extends to the volume buttons, too.

One big change is how natural it feels to talk to Siri. You can stumble across your words or even change your mind mid-query, and Siri will understand. Apple demonstrated this in the keynote.

Siri was asked to “Set an alarm for 7 AM, sorry, I actually meant 5:45.” Sure enough, an alarm was set for 5:45.

Context is also improved, with the assistant retaining awareness across multiple questions.

Siri was asked about the San Francisco Giants, and it understood who "they" were when asked "When do they play next?" after the initial query. Siri even knew what to do when we ended by asking it to "add that to my schedule."

These in-app interactions use App Intents, a framework developers can adopt in their own apps as well. Each interaction also gets its own distinct look.

Double-tap the bottom of the screen to type to Siri

Each interaction was brisk and fluid, even in this unreleased beta, which was particularly impressive.

Some of these interactions, though, will be limited depending on the apps you use. For example, you may not be able to have these complex, context-aware conversations in some apps, like Apple Home.

You still can't say, "turn on the lights in the living room and open the shades 30 percent." Those capabilities will come, though, and developers can do more by adopting App Intents.

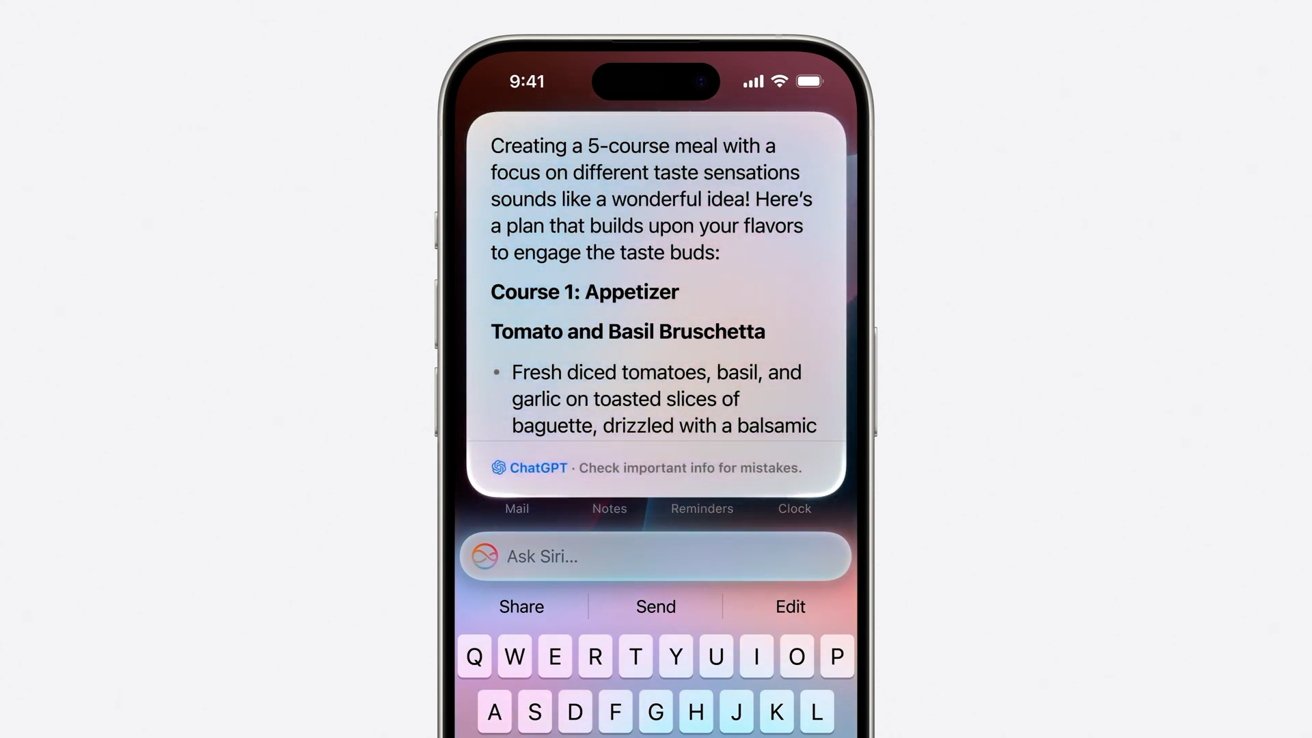

ChatGPT answers the hard questions

While Siri seems to have a great grasp of your personal information, there are times when broader knowledge proves useful. That's where the optional ChatGPT integration comes into play.

Here's how it works: you ask a question, Siri chews on it for a moment, and it suggests that ChatGPT may be better suited to help.

It then explicitly asks you every time if you’d like the query passed to ChatGPT. Contrary to some fearmongering already going on, ChatGPT isn’t privy to every query you make.

If, and only if, you approve the use of ChatGPT, Siri passes along limited information about the query, including photos if applicable, and then returns your answer.

Siri can leverage ChatGPT to help answer questions

There are a few important things to note about this. Users have ultimate control.

ChatGPT must be enabled in Settings, and Siri will ask for permission each time it is used. A user's IP address is also obscured each time, so OpenAI can't match you to past searches; Apple's contract terms with OpenAI explicitly prohibit that as well.

Finally, OpenAI is not allowed to train on these searches either.

However, this all changes if you log in with an OpenAI account. If you do, you’re bound by the policies you agreed to when creating that profile.

We'll see in the fullness of time whether ChatGPT's responses change when you're logged into an OpenAI account. We'll be testing that as the summer progresses.

A promising start, but not a universal one

We left our demo blown away by how impressive Apple Intelligence was. It permeates macOS, iOS, and iPadOS, and will touch so many of your daily tasks.

There are many touches we didn't discuss here, such as the intelligent management of Mail and the sorting of notifications based on what's important.

Apple Intelligence is only going to expand

Most of the AppleInsider staff are deeply interested in the new features. We expect them to be gated behind a wait list, though.

But regardless of any wait list, we're not going to get it on our HomePods in 2024, and probably not on existing HomePod models at all.

If you have a HomePod — or multiple — they won’t have the benefits of Apple Intelligence or the updated Siri. That extends to other devices, too, like your Apple Watch or any unsupported Macs or iPhones.

In the interim, you’ll want to invoke Siri from your iPhone manually so that your HomePod doesn’t take over. You’ll have to adjust your queries based on the device you’re talking to.

These are just growing pains, though. The future is bright, and there is much room for Apple Intelligence to expand.

Apple Intelligence will arrive in the iOS 18 betas this summer before launching as a beta feature this fall. It works on all Macs with Apple silicon, as well as the iPhone 15 Pro and iPhone 15 Pro Max.