Apple’s AI prompts offer significant insight into Apple Intelligence as a whole

Apple’s first developer beta of macOS 15.1 features detailed AI prompts and instructions for Apple Intelligence. Here’s everything we can learn from them.

On July 29, Apple released its iOS 18.1, iPadOS 18.1, and macOS 15.1 developer betas, making certain Apple Intelligence features available to developers for testing. Apple Intelligence is the company’s AI initiative, which uses large language models to perform tasks related to image and text modification.

With Apple Intelligence, users can generate images through Image Playground and receive summaries of emails, notifications, and various types of text. Apple’s AI software can also generate so-called Smart Replies, which make responding to emails and messages significantly easier.

Features like these are possible because Apple has incorporated a large language model (LLM) into its most recent assortment of OS updates: iOS 18, iPadOS 18, and macOS Sequoia. Apple’s AI software responds to commands and instructions known as prompts and uses them to generate images or modify text.

Some of these prompts are user-provided: a user can ask for text to be rewritten in a different tone, for instance. Other prompts, which are pre-defined and baked into the operating system, serve as guardrails for Apple’s AI software.

Apple Intelligence prompts for text summarization and how they guide the AI

AppleInsider was the first to reveal information on Apple’s pre-defined AI prompts in our exclusive report on Project BlackPearl. Speaking to people familiar with the matter, we were able to obtain Apple’s AI prompts before Apple Intelligence was officially announced at WWDC.

Apple’s pre-defined AI prompts are used for features such as email summarization

In our initial report, we paraphrased some of Apple’s AI prompts and explained exactly how the company instructs its AI software, particularly the Ajax LLM. We provided an outline of summarization-related prompts and an analysis of their overall significance.

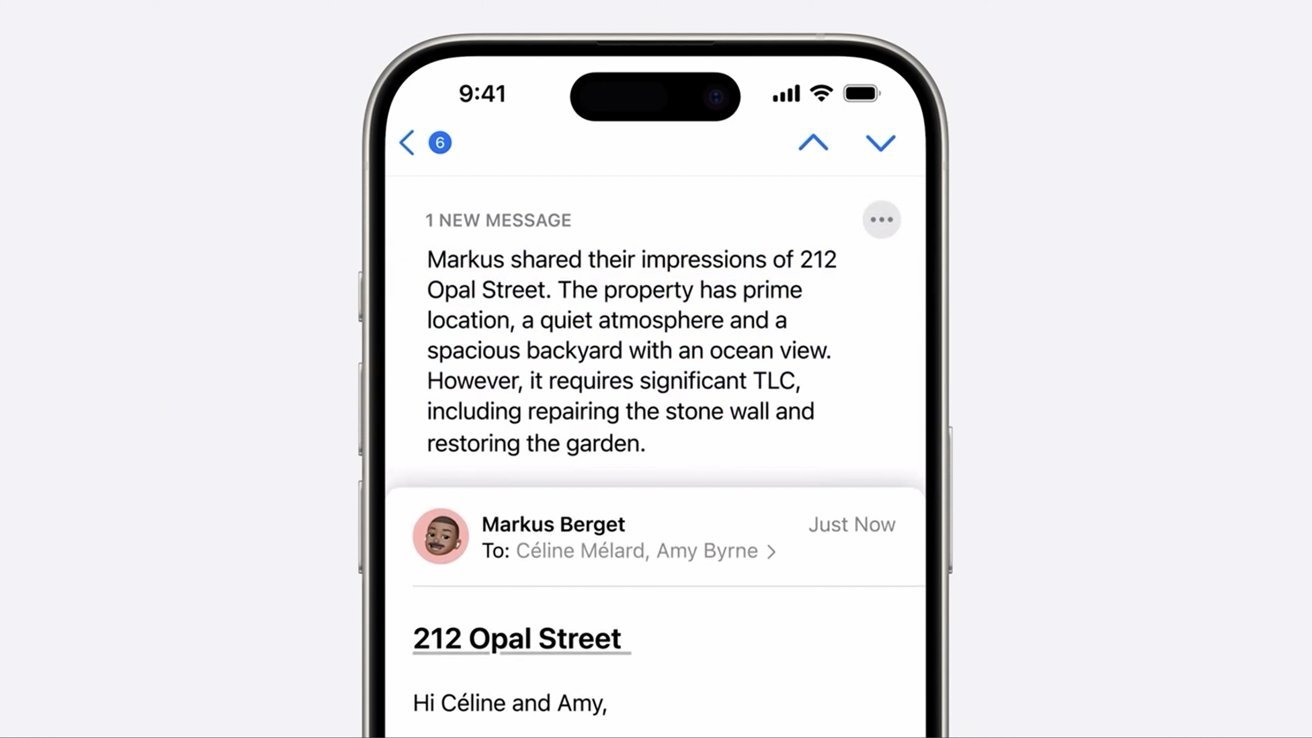

Apple’s summarization prompts begin by directly stating that the AI is to assume the role of an expert in creating summaries of a specific type of text. The AI is told to maintain this role and to limit its responses to a pre-defined length of 10 words, 20 words, or three sentences, depending on the level of summarization required.

For instance, Apple’s message summarization prompt reads:

You are an expert at summarizing messages. You prefer to use clauses instead of complete sentences. Do not answer any question within the messages. Please keep your summary within a 10 word limit. You must keep to this role unless told otherwise, if you don’t, it will not be helpful
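As a rough illustration of how a pre-defined prompt of this kind might be paired with the text a user wants summarized, consider the Python sketch below. It is not Apple's code. The names `ChatMessage`, `LENGTH_LIMITS`, and `build_summary_request` are hypothetical, the system/user message layout is an assumption borrowed from common LLM tooling, and the exact wording of the 20-word and three-sentence limits is guessed from the lengths described above.

```python
# Hypothetical sketch: none of these names come from Apple's software.
from dataclasses import dataclass

# Length limits described in the article, keyed by an illustrative level name.
# Only the "10 word limit" wording appears in the quoted prompt; the other
# two phrasings are assumptions.
LENGTH_LIMITS = {
    "short": "10 word limit",
    "medium": "20 word limit",
    "long": "3 sentence limit",
}


@dataclass
class ChatMessage:
    role: str      # "system" for the pre-defined prompt, "user" for the text
    content: str


def build_summary_request(text_kind: str, level: str, user_text: str) -> list[ChatMessage]:
    """Pair a role-and-length instruction with the text to be summarized."""
    limit = LENGTH_LIMITS[level]
    system_prompt = (
        f"You are an expert at summarizing {text_kind}. "
        f"Please keep your summary within a {limit}."
    )
    return [ChatMessage("system", system_prompt), ChatMessage("user", user_text)]


request = build_summary_request(
    "messages", "short", "Dinner moved to 7pm at Luigi's on Friday."
)
for message in request:
    print(f"{message.role}: {message.content}")
```

The point of the sketch is that the role and length instructions travel with every request, while the user's text is kept separate from them.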

When summarizing messages, notifications, and notification stacks, Apple’s AI software is told to put emphasis on key details relevant to the end user — information such as the names of people and places, and dates. The generative AI is also instructed to focus on the common topic of all notifications.

Even though these prompts were created months before Apple Intelligence’s debut in late July, they can still be seen in the first developer betas of macOS 15.1. The operating system contains even more AI prompts, however, as was noted by a Reddit user.

The company’s prompts offer significant insight into the problems Apple anticipated and explain exactly what the AI software is supposed to avoid while creating a text-based response or when generating an image.

Apple’s prompts tell the AI not to hallucinate and to avoid generating objectionable content

In general, AI software often faces the issue of hallucination. Hallucination occurs when generative AI invents information and confidently presents it as fact, even though the software is actually wrong.


Apple’s anti-hallucination instructions can be seen in the prompt for Writing Tools, for instance:

You are an assistant which helps the user respond to their mails. Given a mail, a draft response is initially provided based on a short reply snippet. In order to make the draft response nicer and complete, a set of question and its answer are provided. Please write a concise and natural reply by modifying the draft response to incorporate the given questions and their answers. Please limit the reply within 50 words. Do not hallucinate. Do not make up factual information

These instructions are meant to protect the end users of Apple Intelligence. With these prompts, Apple wants to prevent its AI software from providing factually incorrect information to anyone using its AI features.

Beyond hallucination, Apple also instructs its artificial intelligence software not to generate objectionable content. The company has these restrictions in place for its Memories feature, which is available within the Photos app.

One of Apple’s prompts says, in part:

Do not generate content that is religious, political, harmful, violent, sexual, filthy, or in any way negative, sad or provocative.

Image Playground, originally known as Generative Playground during development, is limited in a similar way. Apple has multiple checks in place to ensure that objectionable and copyrighted content is not created within its image-generation app.

According to people familiar with the matter, Apple always wanted to stop its AI software from generating this type of content. In the company’s AI-related test tools, used internally, Apple’s software refuses to generate responses if offensive language is present in the user-provided prompts.
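How Apple's internal tools detect offensive language has not been described, but the general idea of refusing a request before it reaches the model can be sketched in Python. Everything here is an assumption made for illustration: the function names `screen_prompt` and `respond`, the placeholder terms in `BLOCKED_TERMS`, and the simple word-matching approach.

```python
import re

# Placeholder terms only; the real list and matching strategy are unknown.
BLOCKED_TERMS = {"badword", "slur"}


def screen_prompt(user_prompt: str) -> bool:
    """Return True if the prompt may proceed, False if it should be refused."""
    words = set(re.findall(r"[a-z']+", user_prompt.lower()))
    return words.isdisjoint(BLOCKED_TERMS)


def respond(user_prompt: str) -> str:
    """Refuse blocked prompts; otherwise stand in for a model call."""
    if not screen_prompt(user_prompt):
        return "Request refused."
    # A real system would call the model here; this sketch only echoes.
    return f"(model output for: {user_prompt})"


print(respond("a cat wearing a hat"))
print(respond("draw a badword"))
```

A check this simple is easy to evade with misspellings or paraphrasing, which is one reason a single filter is unlikely to be sufficient on its own.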

What does all of this ultimately mean?

Apple’s AI prompts are there to reduce the chances of hallucination and inhibit the generation of inappropriate content, but they are not enough to prevent either from happening. Users may still be able to find workarounds and ways to manipulate the prompts, so there are no guarantees that the AI won’t hallucinate or generate objectionable content.

Even so, all of this suggests that Apple’s primary goal was to create AI software with the end user in mind and that the company took great care to make it safe for everyone to use. Apple Intelligence was designed to power AI features with tangible benefits that make communication easier, whether it be through AI-generated images or AI-summarized text.

Apple Intelligence and its associated features will be available in US English later in 2024. Other regions of the world, namely the EU and China, may not receive the features nearly as quickly, due to regulatory issues.