Apple Intelligence is available on newer iPhone models

The slow rollout of Apple Intelligence has begun, but not everything is available to everyone as of December 2024. This is where Apple’s generative AI push stands, and where it will go in the future.

The introduction of Apple Intelligence promised a lot of changes that would improve the lives of iPhone, iPad, and Mac users. The intelligent upgrade to the operating systems would bring in smart features that let users perform tasks more efficiently, generate images, and even make Siri better.

At launch, however, only some of the features were made available, with Apple promising that others were on the way in future updates.

This is the current state of Apple Intelligence, what’s set to arrive soon, and what’s further away on the horizon.

Apple Intelligence now: Text-heavy, US-only

The first actual release to the public outside of developer betas occurred with iOS 18.1, iPadOS 18.1, and macOS Sequoia 15.1 on October 28, 2024. On that day, all three operating systems finally gained the initial wave of Apple Intelligence features.

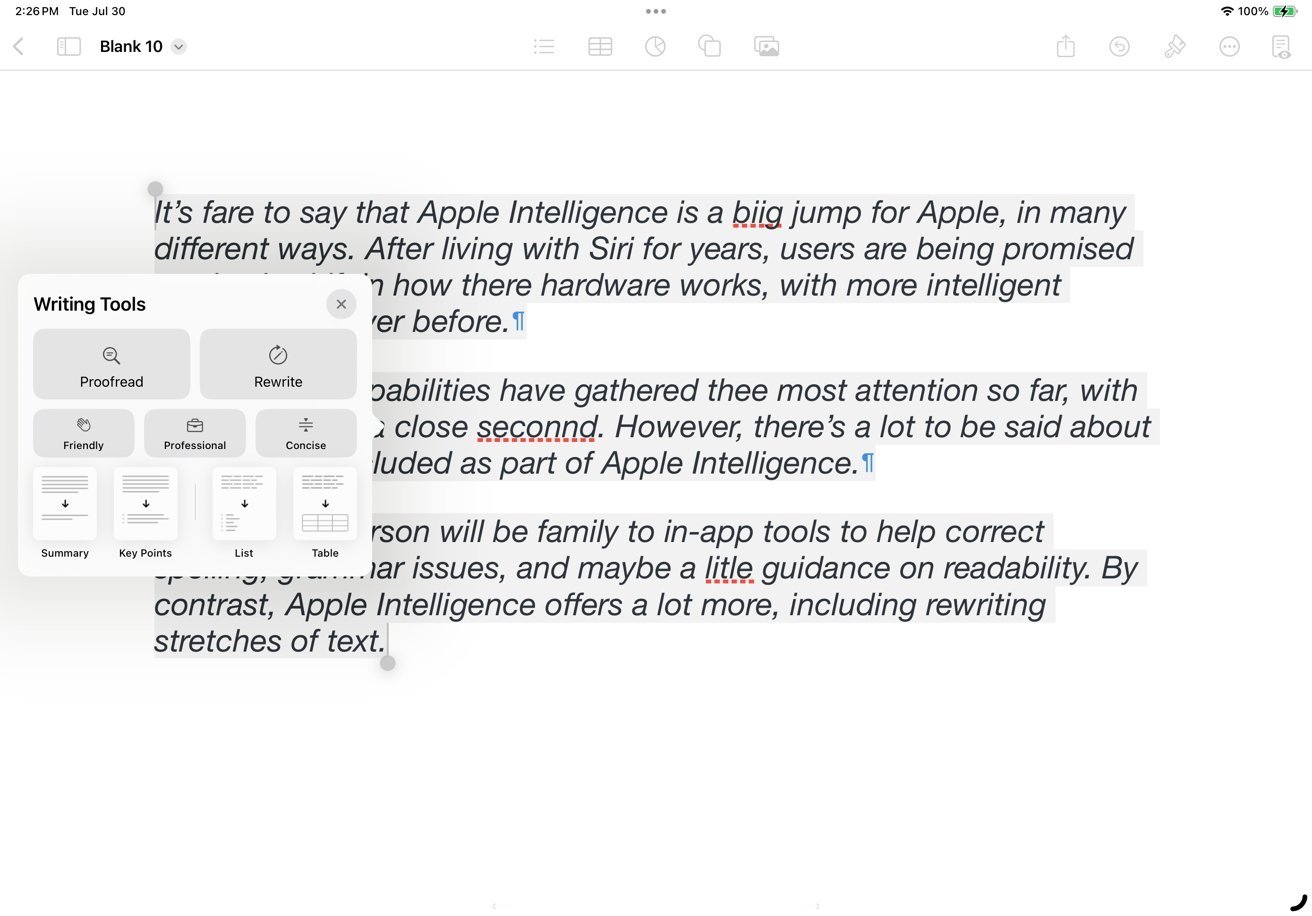

That small list was headed up by Writing Tools, a feature that could help users update the way they wrote. Unlike what you would find in a word processor, Writing Tools were accessible practically anywhere a user would write on their device, even in third-party apps and in a browser.

The main function of Writing Tools is to proofread stretches of text for grammatical and spelling errors, with issues highlighted to the user. This acted like a more powerful version of the spellchecker tool you would tend to find in word processors.

Apple Intelligence Writing Tools

There is also an option to let Apple Intelligence rewrite your text, doing so in a variety of different tones depending on how you wanted to convey your message.

Apple also introduced summarization within Writing Tools, generating a summary of the key points of the text.

Apple also started to make Photos more intelligent, with users able to search for images using natural language. For example, “Cat on a pillow looking at the camera.”

As well as making it easier to find specific photos, the update also helped users make the images perfect. A Clean Up tool would use generative AI to select unwanted elements from an image and to remove them, filling up the space intelligently with what would’ve been in the background.

Photos also benefited from the Memories feature, which generates movies from a description typed by the user. Photos would then select the best shots and videos based on the prompt, create a storyline with chapters using themes from the photographs, and set it to appropriate music.

Apple talked a big game when it came to Siri’s Apple Intelligence overhaul. However, only a few elements were provided initially.

Chiefly, users were able to have a more conversational relationship with Siri. When talking to Siri, users could change their request mid-speech or stumble over their words, and Siri had a pretty good chance of still understanding the final request.

Siri’s new animation

Siri was also loaded up with product knowledge, so users could ask it questions as a form of tech support. For example, you could ask it how to set up a new Focus, and you would be told how to do it.

Siri also benefited from a glow-up, with a new set of animations. When triggered, a multi-colored border would surround the screen on iOS and iPadOS, definitively alerting users that Siri is listening.

Apple’s rollout is slow because the company is being cautious and wants to get everything right. However, it was so slow that only people in the United States were able to use the features at first.

Users with devices set to other regions, or to languages other than American English, were not able to use the new features at all. While US users could use them straight away, everyone else had to wait until Apple expanded availability.

Apple Intelligence soon: Image Playground, Genmoji

While Apple Intelligence had a relatively unassuming initial release, the second wave stands to offer consumers a lot more. The more visual-based elements of Apple Intelligence are coming as part of iOS 18.2, iPadOS 18.2, and macOS 15.2, which are currently being beta-tested by developers ahead of a release in December.

Arguably the biggest visual feature for users is Image Playground, Apple’s app for generating images. Based on a prompt, Image Playground will create a set of images for the user, which can be saved and used in applications.

Image Playground

Users could add more to the prompt, including sets of pre-selected keywords that can be quickly added. If there’s a photo of a person that the user wants to base the image on, they could use that, and Image Playground will do its best to create a caricature.

These images could be created in one of three styles: Animation, Illustration, or Sketch.

This isn’t limited to the Image Playground app, as it also works system-wide. For example, in Notes, Image Playground could generate an image based on key phrases in nearby text, rather than a user-supplied prompt.

Similar in concept to Image Playground, Genmoji allows users to create their own emoji-like images. Again, this is driven by a prompt from the user, and is intended to make emoji that do not exist, or that are customized in some way.

These customizations can, like Image Playground, be based on a person in your Photos library. This allows you to make emoji featuring that person, such as a mashup of a family member dancing.

These new emoji can be inserted into iMessage, just like other emoji.

A feature exclusive to the iPhone 16 generation, Visual Intelligence is a way for users to ask questions based on what they can see. With a press of the new Camera Control button, users can point the iPhone at an object or location and get more information about it.

For example, pointing it at a restaurant can bring up information about ratings and working hours. Or, on scanning a flyer for an event, a user could be asked if they want to add that to their Calendar.

Siri gets more of an improvement as part of the next update in the way it handles responses: it can simply hand some of them off to ChatGPT.

Depending on the user’s query, Siri may offer for it to be shuttled over to ChatGPT for answering, rather than relying on Siri’s knowledge base. For example, asking for a creative story could be better answered using ChatGPT.

Wider availability and usability

While the initial wave of Apple Intelligence was only available in American English, Apple’s beta testing brought it to new English-speaking regions, including the UK, Canada, South Africa, and New Zealand.

Apple also added API access to Image Playground and Writing Tools, so third-party apps have better access to use and incorporate the features.

Apple Intelligence future: Personal Context and expansion

A lot of the announced Apple Intelligence features will be out and usable by iOS 18.2, but there are still a few things on the docket that Apple will be bringing out in future updates.

Key is another Siri update, one that refers to something called Personal Context. This is a game-changing feature that relies on Siri understanding data from other apps.

By picking up key data points from your contacts, your messages, and other areas, Siri can answer much more complex queries than those possible by just using its knowledge graph.

For example, you could ask “What time is my mother’s flight landing?” Siri could use Contacts to determine your mother’s name, search your emails for references to that name and flights, and then, using a flight number found there, look up when the flight will arrive at the airport.
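The chain of lookups in that example can be pictured as a small pipeline. The following is only an illustrative sketch in Python, not Apple's implementation or any Apple API: the `CONTACTS`, `EMAILS`, and `ARRIVALS` data, the flight-number pattern, and every function name are hypothetical stand-ins invented for this example.

```python
import re
from dataclasses import dataclass


@dataclass
class Email:
    sender: str
    body: str


# Hypothetical stand-ins for on-device data and a flight-status source.
CONTACTS = {"mother": "Jane Appleseed"}
EMAILS = [
    Email("Newsletter", "Weekly deals on flights to Lisbon."),
    Email("Jane Appleseed", "Booked! I'm on flight BA117, see you soon."),
]
ARRIVALS = {"BA117": "15:40"}

# Assumed flight-number shape: two capital letters followed by 2-4 digits.
FLIGHT_RE = re.compile(r"\b[A-Z]{2}\d{2,4}\b")


def resolve_contact(relation):
    """Step 1: map a relationship ("mother") to a contact name."""
    return CONTACTS.get(relation)


def find_flight_number(name):
    """Step 2: scan emails that mention the name for a flight number."""
    for email in EMAILS:
        if name in email.sender or name in email.body:
            match = FLIGHT_RE.search(email.body)
            if match:
                return match.group(0)
    return None


def landing_time(relation):
    """Step 3: chain the lookups; return None if any step comes up empty."""
    name = resolve_contact(relation)
    if name is None:
        return None
    flight = find_flight_number(name)
    if flight is None:
        return None
    return ARRIVALS.get(flight)


print(landing_time("mother"))  # prints 15:40
```

Each step depends on the one before it, which is why the feature needs access to data from several apps at once rather than a single knowledge source.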

Apple also has to do a lot more work integrating Apple Intelligence into apps, which will take time to implement.

Current expectations are that iOS 18.3, once its beta round is complete, will be a bug-fix release due early in 2025. Siri’s Personal Context and other integration changes should arrive by iOS 18.4, around March or April.

As for support for other languages, Apple has already said that it is targeting an April 2025 release. This includes support for Chinese, French, German, Italian, Japanese, Korean, Portuguese, Spanish, Vietnamese, and others.