Apple Intelligence is finally here (sort of). With iOS 18.1, the first tranche of AI features comes to our phones (albeit in beta and temporarily behind a waitlist).

The iPhone 16 line was sold as made for Apple Intelligence. It was Apple’s big push at WWDC in the summer, and fancy AI-powered features are front-and-center in all the phone marketing. Camera Control button? Even that is just an AI-powered feature waiting for its true purpose.

But the first set of Apple Intelligence features is kind of…feeble. I’ve been using iOS 18.1 throughout its extended beta test, and there are only two really good AI features, with the rest being a mix of annoyances and “use it a few times and forget about it” demonstrations.

Apple Intelligence: The good

There are two new AI features that I use daily and that I think most people will make a part of their everyday iPhone use: notification summaries and the Clean Up tool.

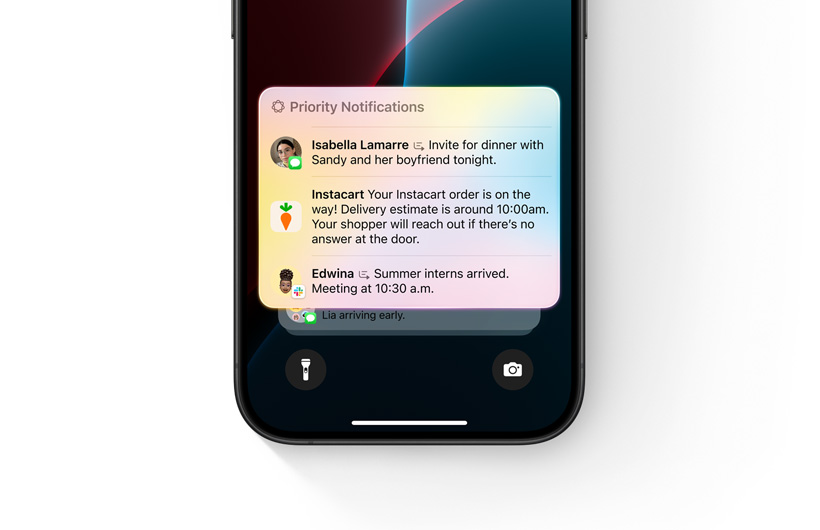

The new notification summaries capability can take almost any notification with more than a couple of lines of text and shorten it to fit in the notification. Text messages, social media posts, news alerts, email alerts… It turns out, most notifications are basically useless. They let you know something happened, but I’ve become so accustomed to not really seeing the information I need that I just tap the notification and open the app to see what it says.

With notification summaries, I get an idea of what an alert is actually about, and it lets me know which notifications actually need my attention and which ones can wait. It’s brilliant and fully intuitive. There’s no learning curve; your notifications just instantly become much more useful.

The Clean Up tool in Photos, while not perfect, is a fun, fast, and easy way to play around with some light photo editing and can quickly improve a lot of impromptu shots. Unfortunately, Apple sort of hides it. You have to open a photo, tap the Edit button (which looks like a set of levers and is not entirely intuitive), and then press the Clean Up button. This is the kind of helpful feature that really deserves a top-level interface button!

Notification summaries are one of those “didn’t know I needed it until I had it” features.

Apple

Apple Intelligence: The bad

The suggested replies in Messages and Mail are routinely terrible. I can’t remember the last time I actually opted to use one, and their glowing presence has become a total nuisance I wish I could easily disable.

The new Siri interface feels like a bad idea, too. I really love the new edge-lit glow and the way it washes over the screen (from the bottom if you say “Hey Siri” and from the right side if you use the side button). But this new look is selling a new Siri that just doesn’t exist.

Siri is better about gracefully handling your “um”s and “ah”s and mid-sentence corrections, but it’s just as dumb as ever about answering requests. The real new Siri isn’t coming until early next year, when Apple rolls out personal contextual awareness, screen awareness, and an overhauled App Intent system that lets it work within apps.

I get the impression iPhone users are going to complain that “the new Siri still sucks” because they don’t realize that the new Siri isn’t here yet, just its facelift.

Apple Intelligence: The rest

Writing tools aren’t especially useful. I almost never found the need to select some text and change its tone or summarize it, and let’s face it, nobody’s going to proofread their emails or social media posts with this. It’s kind of a neat tech demo, the sort of thing you use a few times just to say “cool!” and then hardly ever touch again. When people think of AI and text, they think of asking a question or giving a short prompt and getting paragraphs of original text as a reply, and this isn’t that.

Fortunately, it’s also hidden behind the text-selection interface so it’s easy enough to ignore entirely. It won’t get in your way.

The new natural-language Photos search works great and is really useful, but it’s hardly a game-changer. The same goes for the new focus mode and the summaries of call recordings and transcripts.

Apple Intelligence: Waiting for the good stuff

When the general public thinks of “AI” today, they think of two things: long-form generative AI text, like having ChatGPT write your book report, and AI images.

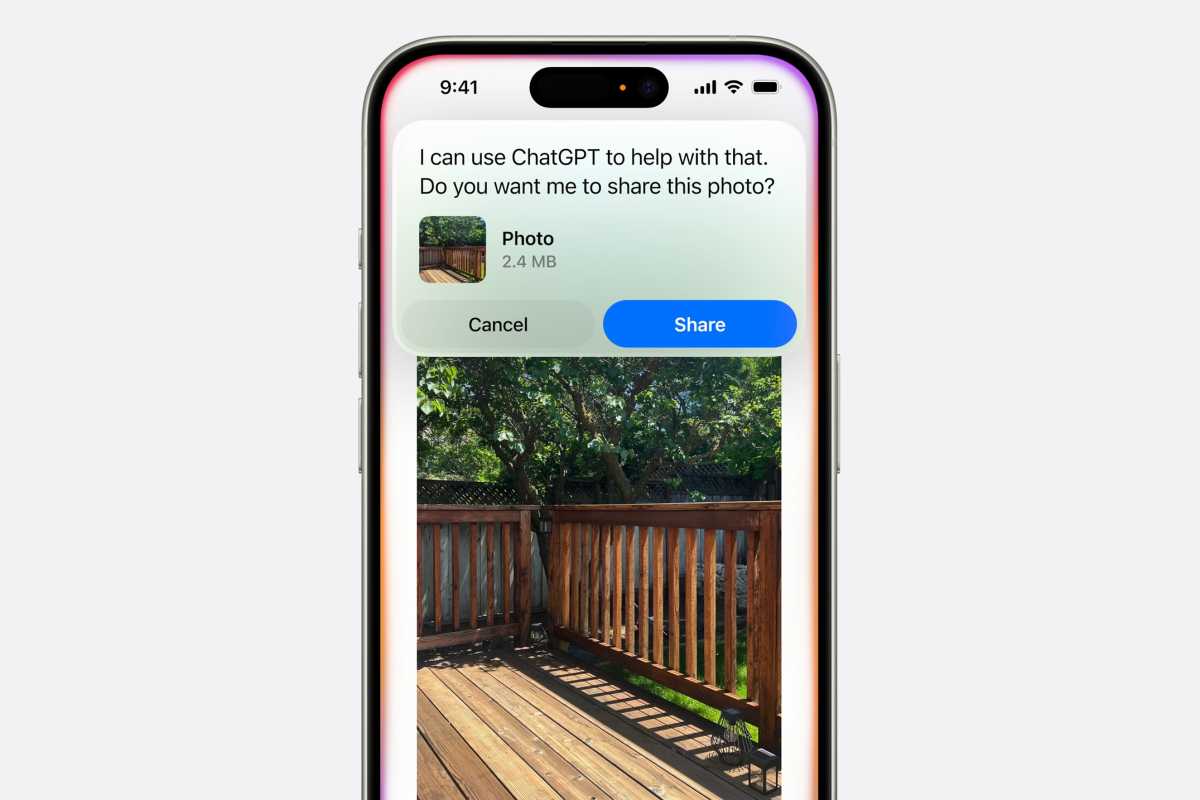

But Apple Intelligence doesn’t give you access to any of that yet. The generative image features (other than the Clean Up tool) have already made an appearance in the first iOS 18.2 beta and are expected to arrive later this year, along with seamless integration with ChatGPT. The Camera Control button will also get its upgrade to summon “Visual Intelligence” in iOS 18.2, making it more useful than the app launcher and shutter it is now.

The ChatGPT integration is still months away.

Apple

And of course, there’s the long wait for the new Siri. Siri has been the poster child for AI since Apple bought the original Siri app and integrated it into the iPhone 4s 13 years ago. After years of not investing properly in advancing Siri’s capabilities, it seems like Apple Intelligence is finally poised to give it a big boost, but we are still five or six months away from getting that on our iPhone 16s.

In other words, our first taste of Apple Intelligence is a mixed bag at best, and it’s going to be months before our new iPhone 16s do all the neat things shown in Apple’s marketing.